How the Australian Government manages its digital projects to support success

This section explains how digital projects are supported from the centre of government, including through a world-leading investment management framework designed to create the conditions digital projects need to succeed.

Reforms supporting success

Ensuring digital projects deliver expected benefits for Australians on time and on budget sits at the heart of each of the reforms highlighted throughout this report.

How digital projects are monitored and supported from the centre of government

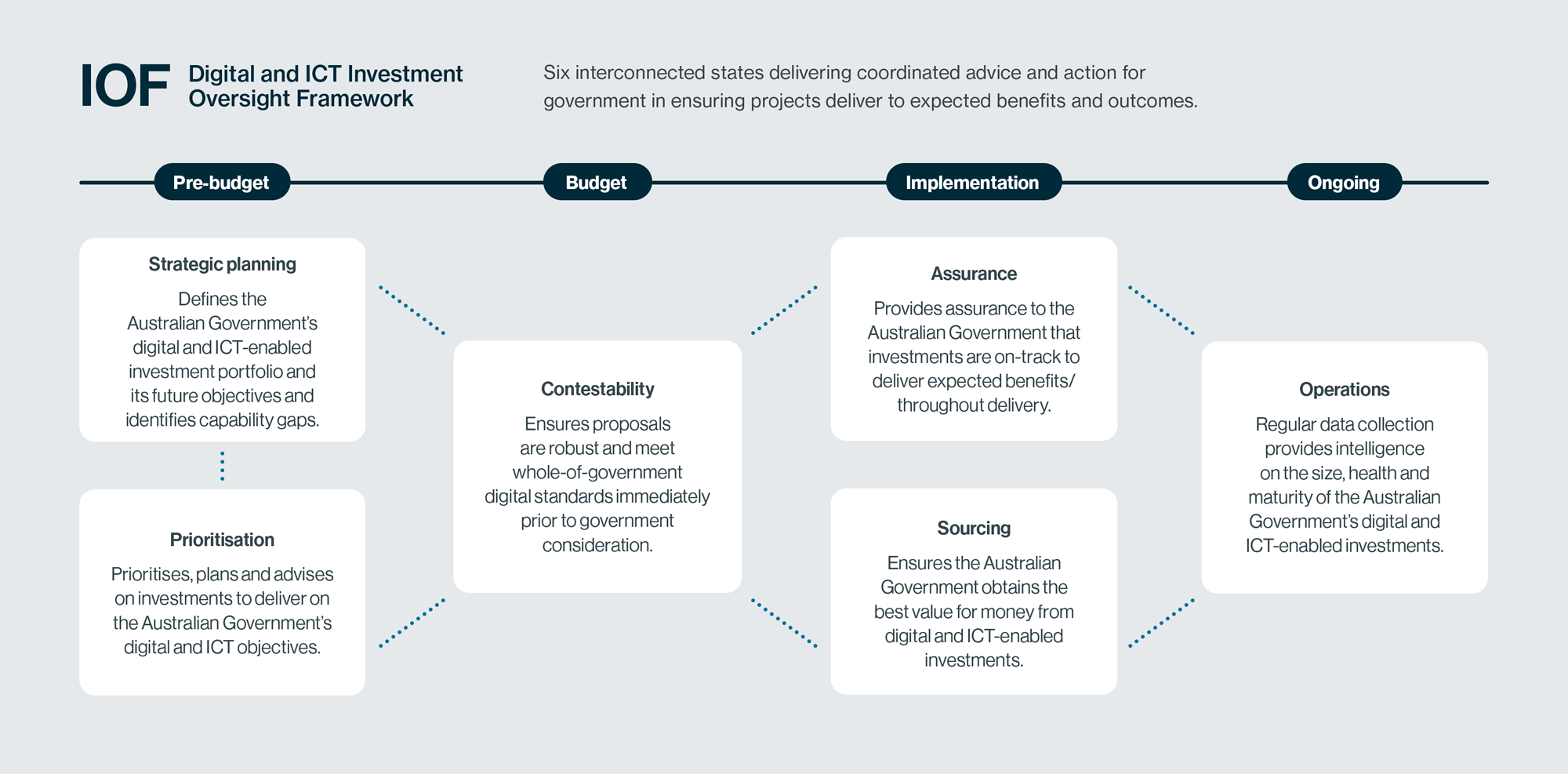

In the past year, more projects have come under central monitoring and oversight as part of the Australian Government’s Digital and ICT Investment Oversight Framework (IOF).

This world-class framework is designed to ensure digital projects are strategically aligned, carefully prioritised, meet digital policies and standards, and realise expected benefits for Australians.

The IOF starts with setting a clear strategic direction, which is then reinforced throughout the lifecycle of project design, funding and implementation. Throughout this lifecycle, best-practice digital policies and standards set clear requirements, and the DTA supports agencies in meeting them.

Image description

IOF Digital and ICT Investment Oversight Framework.

Six interconnected states delivering coordinated advice and action for government in ensuring projects deliver expected benefits and outcomes. The diagram shows four headlines with various states of the IOF below them as subheadings.

- Image headline: Pre-budget

- Subheading: Strategic planning: Defines the Australian Government’s digital and ICT-enabled investment portfolio and its future objectives and identifies capability gaps.

- Subheading: Prioritisation: Prioritises, plans and advises on investments to deliver on the Australian Government’s digital and ICT objectives.

- Image headline: Budget

- Subheading: Contestability: Ensures proposals are robust and meet whole-of-government digital standards immediately prior to government consideration.

- Image headline: Implementation

- Subheading: Assurance: Provides assurance to the Australian Government that investments are on track to deliver expected benefits throughout delivery.

- Subheading: Sourcing: Ensures the Australian Government obtains the best value for money from digital and ICT-enabled investments.

- Image headline: Ongoing

- Subheading: Operations: Regular data collection provides intelligence on the size, health and maturity of the Australian Government’s digital and ICT-enabled investments.

Reforms supporting success – enabling project success through good assurance

Since 2021, the Australian Government has invested in strengthening central oversight of digital projects. This central oversight works to ensure best practice is systematically applied as digital projects are designed and delivered across agencies. By driving the adoption of best practice, central oversight plays a key role in giving each digital project the best chance of success.

The Assurance Framework for Digital and ICT Investments mandates global best practice in the use of assurance for digital projects. While assurance doesn’t in itself deliver outcomes, effective assurance is critical to good governance and decision-making. All projects in this report are subject to the Assurance Framework and must apply its ‘key principles for good assurance’. These principles draw on global best practice and, when applied effectively, provide confidence that digital projects will achieve their objectives, without leading to excessive levels of assurance.

The Assurance Framework also includes escalation protocols to help agencies resolve delivery challenges that digital projects might encounter. Central oversight of assurance also ensures that lessons learned across digital projects are systematically incorporated into the design and delivery of future projects, reducing the risk of delivery issues recurring.

Reforms supporting success – ensuring digital projects are well designed

The DTA works with agencies to ensure robust and defensible proposals for spending on all new digital projects.

Each proposal must align with the government’s strategies, policies and best-practice digital standards as part of the Digital Capability Assessment Process (DCAP).

For complex, high-risk and high-cost digital projects, the DTA offers additional support through the ICT Investment Approval Process (IIAP). This involves working with agencies to develop and mature implementation planning to support success. A comprehensive business case must clearly demonstrate the need for funding, based on thorough policy development, a well-planned approach to delivery and mechanisms for reviewing project progress. This process aids government decision-making on whether to fund large and complex digital project proposals.

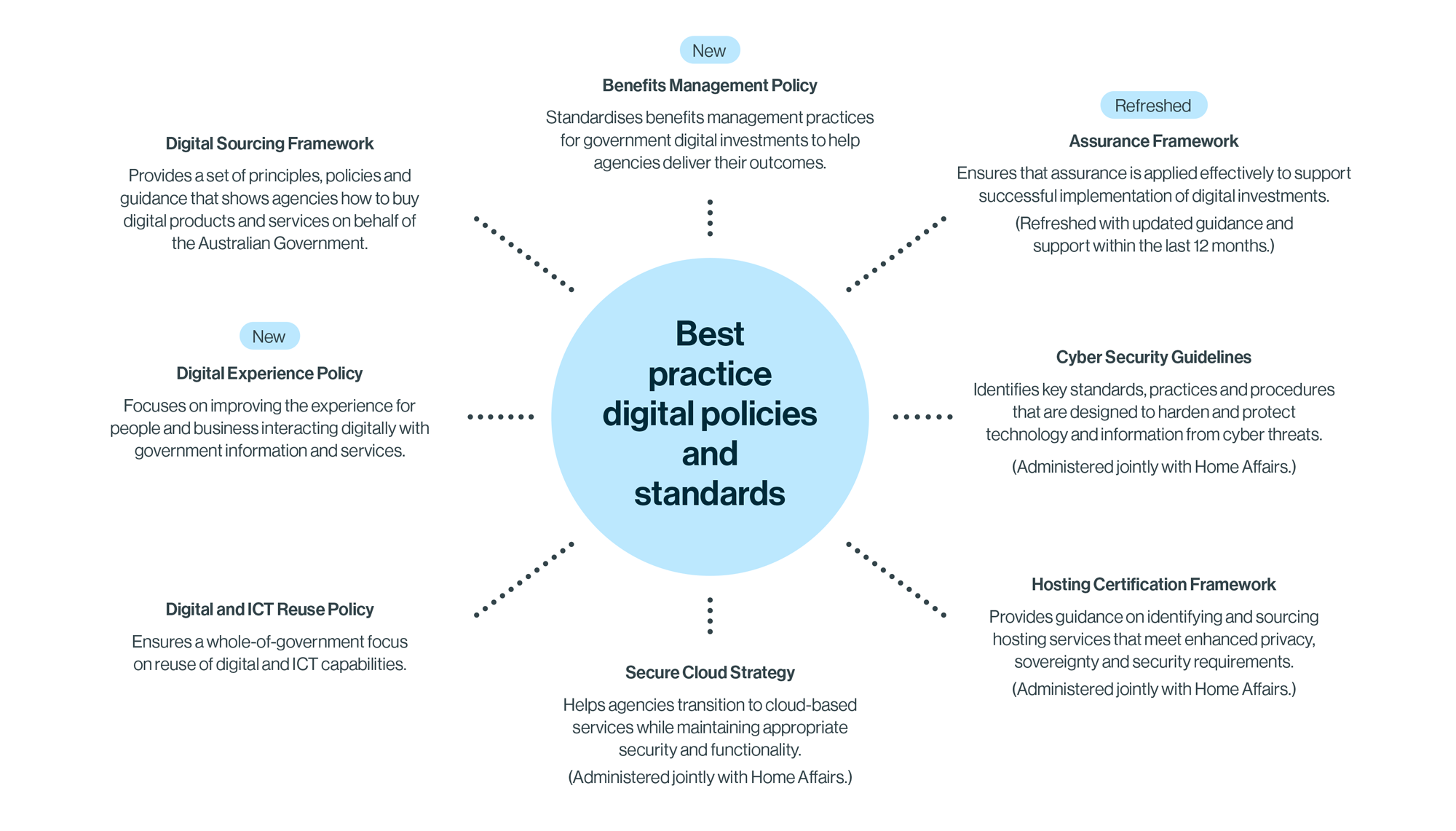

The DTA’s digital policies and standards codify best practice and ensure digital projects are positioned to succeed

The policies that apply to digital projects in the Australian Government are constantly being reviewed and updated. This is necessary to ensure they best support agencies in delivering the world-class data and digital capabilities needed to support the missions set out in the Data and Digital Government Strategy.

Image description

The image shows a central circle with lines leading off to the subheadings.

Image headline (in the centre): 'Best Practice Digital Policies and Standards'

Subheadings:

- Secure Cloud Strategy

- Helps agencies transition to cloud-based services while maintaining appropriate security and functionality.

- (Administered jointly with Home Affairs)

- Hosting Certification Framework

- Provides guidance on identifying and sourcing hosting services that meet enhanced privacy, sovereignty and security requirements.

- (Administered jointly with Home Affairs)

- Cyber Security Guidelines

- Identifies key standards, practices and procedures that are designed to harden and protect technology and information from cyber threats.

- (Administered jointly with Home Affairs)

- Assurance Framework (Refreshed)

- Ensures that assurance is applied effectively to support successful implementation of digital investments.

- (Refreshed with updated guidance and support within the last 12 months)

- Benefits Management Policy (New)

- Standardises benefit management practices for government digital investments to help agencies deliver their outcomes.

- Digital Sourcing Framework

- Provides a set of principles, policies and guidance that shows agencies how to buy digital products and services on behalf of the Australian Government.

- Digital Experience Policy (New)

- Focuses on improving the experience for people and businesses interacting digitally with government information and services.

- Digital and ICT Reuse Policy

- Ensures a whole-of-government focus on reuse of digital and ICT capabilities.

Reforms supporting success – improving benefits management capability

The Australian Government’s Benefits Management Policy for Digital and ICT-Enabled Investments requires agencies to use best practice benefits management for their digital projects. Projects must identify measurable benefits with clear baselines and targets before funding decisions are taken. The minimum policy requirements are adjusted based on project tier, but all projects must focus on securing benefits in addition to preventing cost and schedule overruns.

The DTA oversees the realisation of benefits and identifies emerging risks across digital projects. We also focus on providing advice, support and training to improve public service capabilities.

Investment in this area aims to ensure that digital projects deliver anticipated benefits to the government and Australians.