
digital.gov.au accessibility statement

We’ve designed our website to meet the Australian Government standard for web accessibility. This standard includes the Web Content Accessibility Guidelines (WCAG) 2.2.

If you have any feedback, suggestions or problems accessing a page or using the website, please email us at digitalchannels@dta.gov.au or phone 0460 434371 or 0487 687410.

Services to help you

- Hearing or speech disability: To contact the Digital Channels team, phone the National Relay Service at (02) 6120 8426 or email info@dta.gov.au.

- Languages other than English: If you need an interpreter, phone the Translating and Interpreting Service (TIS National) at 131 450. They will connect you to the Digital Channels team on 02 6120 8707.


digital.gov.au incorporates new design elements, components and widgets to simplify the user experience and improve digital communications. This is an open, transparent test to encourage user input.

Tell us what you think and help us make it better.


Privacy Collection Notice

This feedback form is designed to collect data anonymously. No identifying information is collected unless you volunteer it by filling in the name or email field.

If you provide any identifiable information, it will not be shared elsewhere unless you have given consent, or it is authorised or required by law.

Note: All information received by the DTA is held in secure online systems, with access to this information restricted to staff on a need-to-know basis only. When personal information is no longer required to be retained as part of a Commonwealth record, it is generally destroyed in accordance with the Archives Act 1983.


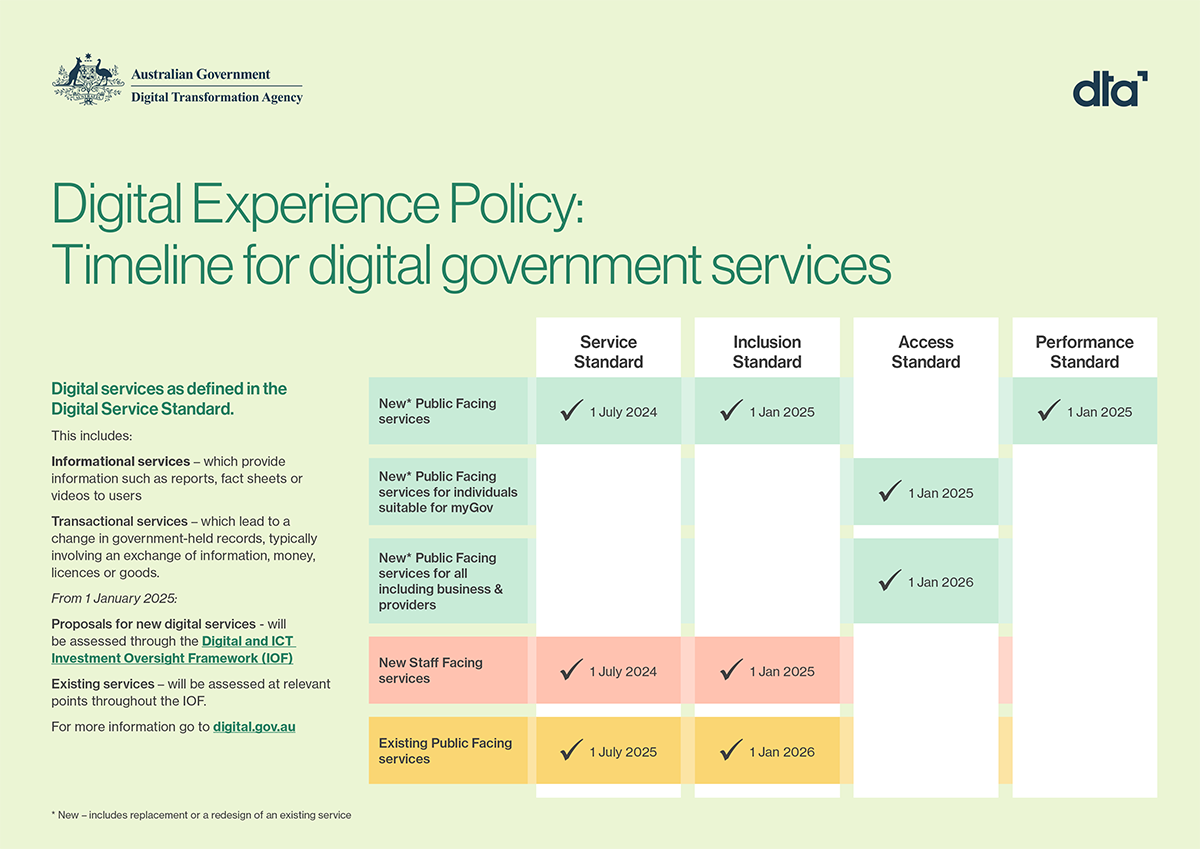

Digital Experience Policy Timeline

The Digital Experience Policy, including the updated Digital Service Standard 2.0 and three new standards, comes into effect on the rolling timeline below.

Digital services as defined by the Digital Service Standard

This includes:

- Informational services – which provide information such as reports, fact sheets or videos to users.

- Transactional services – which lead to a change in government-held records, typically involving an exchange of information, money, licences or goods.

From 1 January 2025:

- Proposals for new digital services – will be assessed through the Digital and ICT Investment Oversight Framework (IOF).

- Existing services – will be assessed at relevant points throughout the IOF.


1 July 2024

Digital Service Standard 2.0 phase 1

- New public-facing and staff-facing services

- Digital Experience Policy comes into effect
