Check OECD.AI for any updates to the definition.

The framework applies regardless of whether an AI model or system is procured, built or otherwise sourced or adapted.

An AI use case is a covered AI use case if it has reached the ‘design, data and models’ AI lifecycle stage (see section 1.2 below and in the guidance) and if any of the following apply.

- The estimated whole‑of‑life cost of the project or service incorporating the use of AI is more than $10 million. This coverage is consistent with other whole-of-government digital policies to ensure agencies understand that AI is classified as a digital and ICT investment and must meet Investment Oversight Framework obligations.

- It is possible the use of AI will lead to more than insignificant harm to individuals, communities, organisations or the environment.

- The use of AI will materially influence decision-making that affects individuals, communities, organisations or the environment.

- It is possible the AI will either directly interact with the public or produce outputs that are not subject to human review prior to publication.

- It is deemed a covered AI use case by the DTA.
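The coverage test above combines one stage condition with an "any of" list of criteria. As a minimal sketch of that logic, the following assumes a hypothetical `UseCase` record whose field names are illustrative only and are not defined by the framework. Judgement calls such as "more than insignificant harm" are treated here as yes/no answers already made by the agency.

```python
from dataclasses import dataclass

# "More than $10 million" whole-of-life cost, per the first criterion.
COST_THRESHOLD_AUD = 10_000_000


@dataclass
class UseCase:
    """Hypothetical screening answers; field names are not from the framework."""
    reached_design_data_models_stage: bool
    whole_of_life_cost_aud: float = 0.0
    harm_possible: bool = False         # more than insignificant harm is possible
    influences_decisions: bool = False  # materially influences decision-making
    public_or_unreviewed: bool = False  # public interaction or unreviewed published outputs
    deemed_by_dta: bool = False         # the DTA has deemed it a covered use case


def is_covered(uc: UseCase) -> bool:
    """Return True if the stage test is met and at least one criterion applies."""
    if not uc.reached_design_data_models_stage:
        return False
    return any([
        uc.whole_of_life_cost_aud > COST_THRESHOLD_AUD,
        uc.harm_possible,
        uc.influences_decisions,
        uc.public_or_unreviewed,
        uc.deemed_by_dta,
    ])
```

Note that the cost criterion is a strict "more than" comparison, so a project costing exactly $10 million would not be covered on cost alone.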

For the avoidance of doubt, you are not required to undertake an assessment under this framework if you are doing early-stage experimentation which does not:

- commit you to proceeding with a use case or to any design decisions that would affect implementation later

- commit you to expending significant resources or time

- risk harming anyone

- introduce or exacerbate any privacy or cybersecurity risks

- produce outputs that will form the basis of policy advice, service delivery or regulatory decisions.

However, you may still find the framework useful and choose to apply it. Agencies are encouraged to apply the framework as appropriate for AI use cases that are not covered. Early and iterative engagement with the framework throughout the lifecycle of an AI use case will assist you to identify, manage and mitigate risks more effectively.
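The early-stage experimentation exemption described above amounts to checking that none of the five listed conditions applies. A minimal sketch, assuming the activity is already known to be early-stage experimentation; the function and parameter names are hypothetical and do not come from the framework.

```python
def experimentation_is_exempt(
    commits_to_use_case_or_design: bool,
    commits_significant_resources: bool,
    risks_harm: bool,
    adds_privacy_or_cyber_risk: bool,
    outputs_inform_advice_or_decisions: bool,
) -> bool:
    """Return True only when none of the five listed conditions applies."""
    return not any([
        commits_to_use_case_or_design,
        commits_significant_resources,
        risks_harm,
        adds_privacy_or_cyber_risk,
        outputs_inform_advice_or_decisions,
    ])
```

Even when this returns `True`, the framework can still be applied voluntarily, as the text above notes.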

Assessment process

Each use case assessment should be completed by a designated assessment contact officer who oversees the end-to-end assessment process. Assessment contact officers should first complete sections 1 to 3, then, depending on the outcome of the threshold assessment at section 3, proceed to complete a full assessment.

The assessment sections do not need to be completed in the numbered order – assessment contact officers may choose to work on sections concurrently and revisit sections as needed. Assessment contact officers will likely need to seek input from other staff in their agency to complete some sections, and in some cases may need external advice. Refer to the accompanying draft guidance material, which is intended as an interpretation aid.

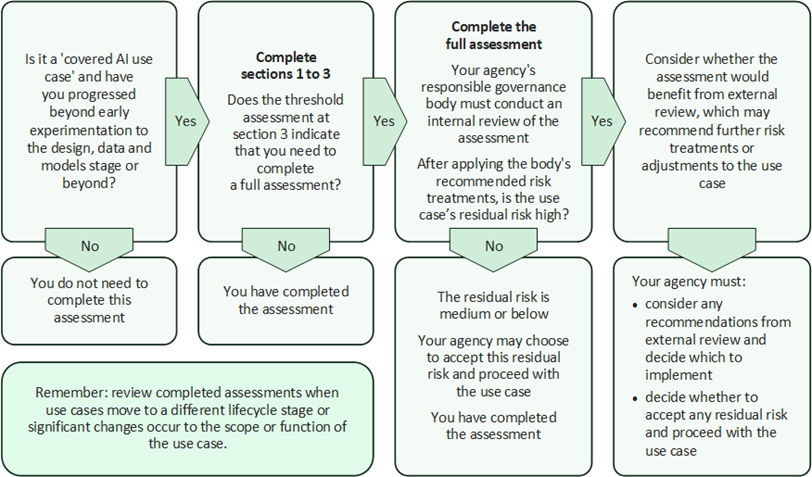

Below is a simplified process map for completing an assessment under this framework.

1. Is it a ‘covered AI use case’ and have you progressed beyond early experimentation to the design, data and models stage or beyond?

    - If no, you do not need to complete this assessment.

    - If yes, complete sections 1 to 3 of the framework and proceed to question 2 below.

2. Does the threshold assessment at section 3 of the framework indicate that you need to complete a full assessment?

    - If no, you have completed the assessment.

    - If yes, complete the full framework assessment and proceed to question 3 below.

3. Your agency’s responsible governance body must conduct an internal review of the assessment. After applying the body’s recommended risk treatments, is the use case’s residual risk high?

    - If no, the residual risk is medium or below. Your agency may choose to accept this residual risk and proceed with the use case. You have completed the assessment.

    - If yes, proceed to question 4 below.

4. Consider whether the assessment would benefit from external review, which may recommend further risk treatments or adjustments to the use case. Your agency must:

    - consider any recommendations from external review and decide which to implement

    - decide whether to accept any residual risk and proceed with the use case.

Remember to review completed assessments when use cases move to a different lifecycle stage or significant changes occur to the scope or function of the use case.
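The process map above can be read as a short chain of yes/no decisions. The sketch below is one way to express it, assuming the three answers are already known; the `Outcome` names and the `assessment_outcome` function are hypothetical labels for illustration, not terms used by the framework.

```python
from enum import Enum


class Outcome(Enum):
    """Hypothetical labels for the end points of the process map."""
    NOT_REQUIRED = "assessment not required"
    COMPLETE_AFTER_THRESHOLD = "complete after sections 1 to 3"
    COMPLETE_AFTER_INTERNAL_REVIEW = "complete after internal review"
    CONSIDER_EXTERNAL_REVIEW = "consider external review"


def assessment_outcome(
    covered_and_past_experimentation: bool,
    full_assessment_required: bool = False,
    residual_risk_high: bool = False,
) -> Outcome:
    """Walk the four questions in order and return where the process ends."""
    # Question 1: covered use case at the design, data and models stage or beyond?
    if not covered_and_past_experimentation:
        return Outcome.NOT_REQUIRED
    # Question 2: does the section 3 threshold assessment call for a full assessment?
    if not full_assessment_required:
        return Outcome.COMPLETE_AFTER_THRESHOLD
    # Question 3: is residual risk still high after the recommended treatments?
    if not residual_risk_high:
        return Outcome.COMPLETE_AFTER_INTERNAL_REVIEW
    # Question 4: consider external review, then decide on recommendations and residual risk.
    return Outcome.CONSIDER_EXTERNAL_REVIEW
```

For example, `assessment_outcome(True, True, False)` corresponds to a use case that needed a full assessment and whose residual risk is medium or below after internal review. The outcome would need to be re-evaluated whenever the use case moves to a different lifecycle stage or changes significantly in scope or function.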


Pilot AI assurance framework

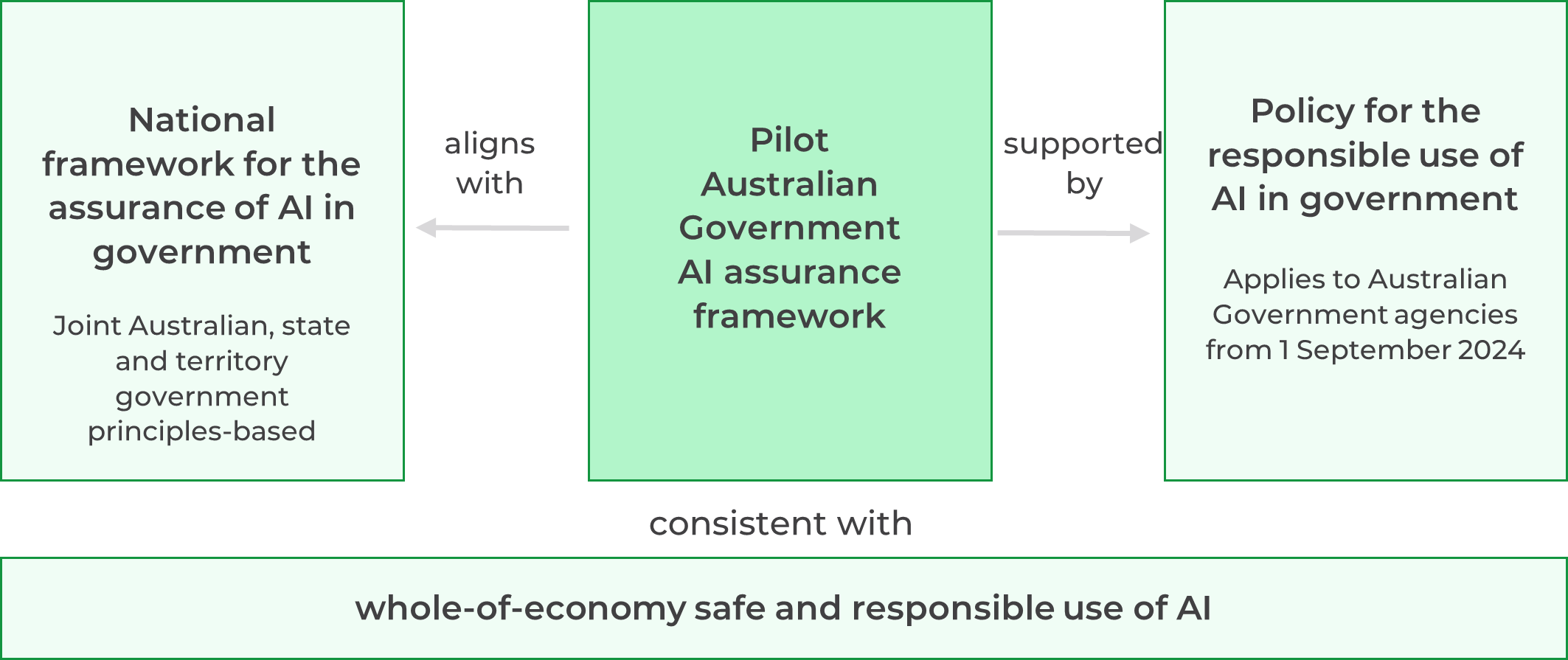

This is a draft document for Australian Government agencies participating in the Pilot Australian Government artificial intelligence (AI) assurance framework, led by the Digital Transformation Agency (DTA) from September to November 2024. Further practical advice on applying the framework is contained in accompanying guidance material.

The assurance framework’s impact assessment process is divided into 11 sections. The accompanying guidance document mirrors this structure, with each of its 11 sections corresponding to a section of the assurance framework.

The draft framework and guidance are subject to change based on feedback from pilot participants and other stakeholders. This pilot draft does not represent a final Australian Government position on AI assurance.

For further information on the framework and accompanying guidance, please email aistandards@dta.gov.au.


AI assurance framework guidance

This document provides draft guidance for Australian Government agencies completing assessments using the Pilot Australian Government AI assurance framework (the framework). Use it as an interpretation aid and as a source of useful prompts and resources to assist you in filling out the assessment.

This guidance document mirrors the structure of the framework, with each of its 11 sections corresponding to a section of the assurance framework.

Introduction

This document provides guidance for Australian Government agencies completing assessments using the Australian Government artificial intelligence (AI) assurance framework (the framework). Use it as an interpretation aid and as a source of useful prompts and resources to assist you in filling out the assessment.

In addition to this guidance document and the framework itself, make sure you consult the Policy for the responsible use of AI in government.

For more guidance, you may also wish to consult resources such as the:

- Voluntary AI Safety Standard

- New South Wales Government AI Assessment Framework

- Queensland Government Foundational artificial intelligence risk assessment (FAIRA) framework

- National framework for the assurance of AI in government

- Australian Government Architecture Artificial Intelligence (AI)

- National AI Centre (NAIC) Implementing Australia’s AI Ethics Principles report

- CSIRO Data61 Responsible AI Pattern Catalogue

- ISO AI Management System standard (AS ISO/IEC 42001:2023)

- Organisation for Economic Co-operation and Development (OECD) Artificial Intelligence Papers library

- United States National Institute of Standards and Technology AI Risk Management Framework.